I haven’t had this much fun writing a few lines of sample code and getting them to work in a long time. Calling the REST API for #dalle2 in the #azureopenai service was a breeze. What made it fun were the bugs in the example code generated by the DALL-E 2 Preview Playground page in the Azure Portal: fixing them taught me how the service works. Unlike the text completion Davinci models, this REST service doesn’t require you to deploy a model in Azure first. I also played around with the text completion Davinci models, calling them through both the Azure OpenAI and #openai APIs.

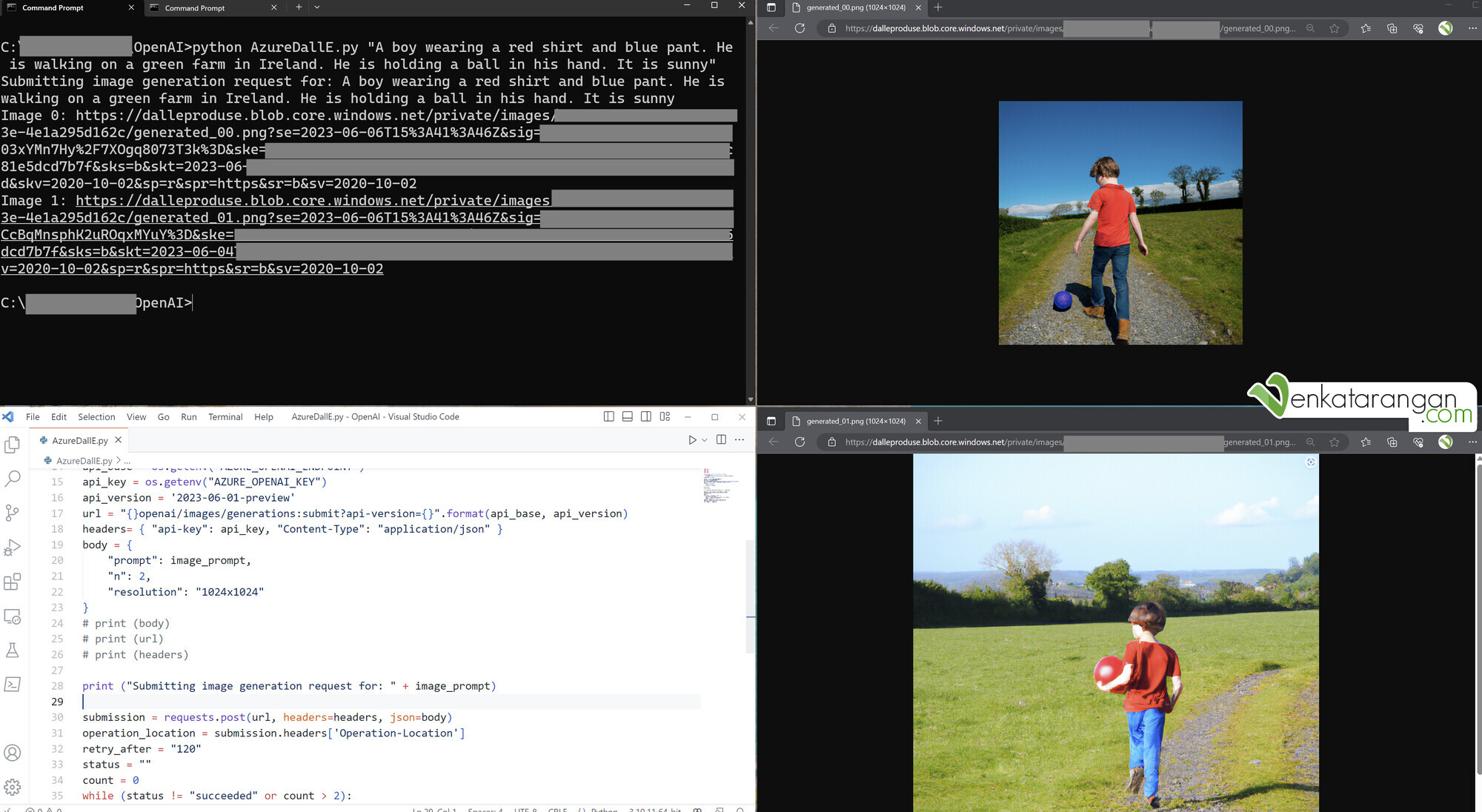

    import os
    import sys
    import time

    import requests

    # Get the image prompt from the command-line arguments
    if len(sys.argv) < 2:
        print("Please provide the image prompt as a command line argument.")
        sys.exit(1)
    image_prompt = sys.argv[1]

    api_base = os.getenv("AZURE_OPENAI_ENDPOINT")
    api_key = os.getenv("AZURE_OPENAI_KEY")
    if not api_base or not api_key:
        print("Please set AZURE_OPENAI_ENDPOINT and AZURE_OPENAI_KEY.")
        sys.exit(1)
    api_version = '2023-06-01-preview'

    # Strip any trailing slash so the URL is valid whether or not the endpoint ends with one
    url = "{}/openai/images/generations:submit?api-version={}".format(api_base.rstrip("/"), api_version)
    headers = {"api-key": api_key, "Content-Type": "application/json"}
    body = {
        "prompt": image_prompt,
        "n": 2,
        "size": "1024x1024"  # this API version expects "size" for the image dimensions
    }

    print("Submitting image generation request for: " + image_prompt)
    submission = requests.post(url, headers=headers, json=body)
    submission.raise_for_status()
    operation_location = submission.headers['Operation-Location']

    # Poll the operation until it succeeds, fails, or we run out of attempts
    retry_after = 120  # seconds to wait between polls
    max_attempts = 3
    status = ""
    count = 0
    while status not in ("succeeded", "failed") and count < max_attempts:
        time.sleep(retry_after)
        response = requests.get(operation_location, headers=headers)
        status = response.json()['status']
        count = count + 1

    if status != "succeeded":
        print("Image generation did not succeed (last status: " + status + ")")
        sys.exit(1)

    # One URL per requested image ("n": 2 above)
    images = response.json()['result']['data']
    for i, image in enumerate(images):
        print("Image {}: {}".format(i, image['url']))
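The submit-then-poll flow in the script above can also be factored into a small helper, which makes the loop easier to test without calling the service. This is only a sketch: `poll_operation`, its parameters, and the treatment of `"failed"` and `"canceled"` as terminal statuses are my own assumptions, not something taken from the Playground sample.

```python
import time
from typing import Callable, Optional


def poll_operation(
    fetch_status: Callable[[], dict],
    interval_seconds: float = 5.0,
    max_attempts: int = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[dict]:
    """Call fetch_status() until the operation succeeds.

    Returns the final JSON payload on success, or None if max_attempts
    polls pass without success. Raises RuntimeError on a terminal failure.
    """
    for _ in range(max_attempts):
        payload = fetch_status()
        status = payload.get("status")
        if status == "succeeded":
            return payload
        # Assumption: these status names mark a terminal, unsuccessful state.
        if status in ("failed", "canceled"):
            raise RuntimeError("Image generation ended with status: " + str(status))
        sleep(interval_seconds)
    return None


# Hypothetical usage with the variables from the script above:
# payload = poll_operation(
#     lambda: requests.get(operation_location, headers=headers).json()
# )
# if payload is not None:
#     for image in payload["result"]["data"]:
#         print(image["url"])
```

Passing `fetch_status` and `sleep` in as callables keeps the helper independent of `requests`, so the polling logic can be exercised with canned responses.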

Output from the DALL-E 2 service in Azure OpenAI
