A few days ago, my friend Mani Manivannan mentioned on his Facebook page that the book "Tamil-Tamil Agaramudali" (Tamil-Tamil Dictionary), published in 1985 by the Tamil Nadu Textbook Corporation, Chennai, is available for free as an ebook, and he shared the link to it. It is a useful book, and I downloaded it right away.

The Tamil Virtual Academy has scanned and copied this book well. There is just one shortcoming: the ebook cannot be searched in Tamil. That is because Tamil support has only recently arrived in easily available optical character recognition (OCR) applications. So I ran OCR on the downloaded ebook using a free application called Tesseract and have made the result available as a new copy. I have described below how I did it.

Recently, my friend Mr Mani Manivannan shared a link to a free PDF ebook of a Tamil-Tamil dictionary published in 1985 by the Tamil Nadu Text Book and Educational Services Corporation. Unfortunately, the file was not searchable in Tamil, because OCR tools that can read Tamil text have become widely available only in recent months.

I tried to fix it. I started by downloading the ebook (PDF file) from Archive.org, then performed OCR using the open-source app Tesseract, a wonderful tool that I had talked about at the Tamil Internet Conference 2019. Tesseract reads each page as a picture and performs character recognition, that is, it converts the text it finds in the image into a string of Unicode text. The accuracy of the OCR is not 100%, but it is certainly usable. Tesseract then attaches the Unicode text to the image as an invisible layer, along with markers indicating where on the page each piece of text was read from; this technique is known as embedded text in a PDF. Finally, it writes the image and the associated Unicode text for every page into a new output PDF file.

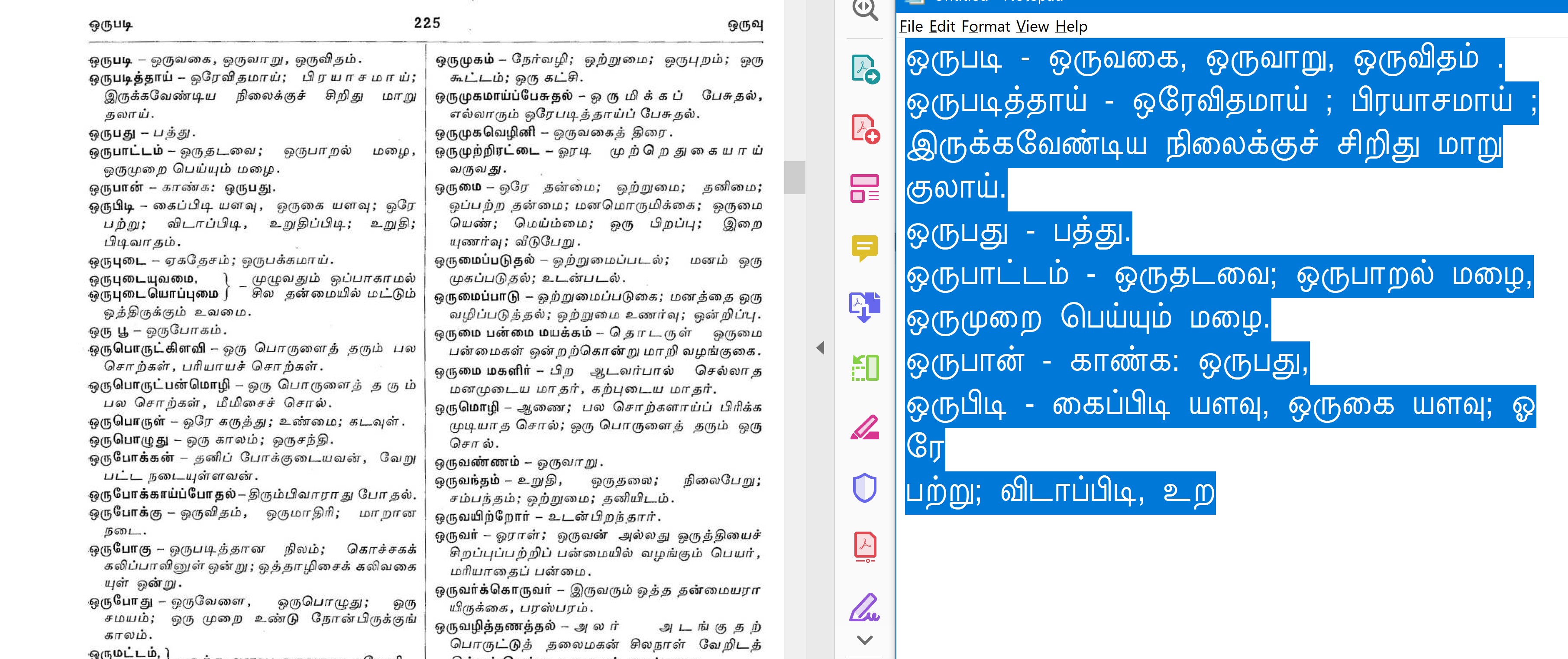

From the PDF file (on the left), I can copy and paste the Tamil text into Notepad (on the right) in Unicode format seamlessly. The accuracy is not 100%, but it is certainly usable.

Searching in the ebook in Tamil works well

Below are the three batch files that I wrote to run on Windows 10; together they perform the necessary steps.

Step 1: Tesseract accepts only image files such as PNG, JPG or TIFF as input, so we need to extract every page of the PDF file as an individual image. I am using the Ghostscript utility to do this; the download URL is given in the batch file's comments. The batch file creates the image files with the fixed name __inputpages*.png; you may make it generic by accepting the output filename as a command-line parameter.

    echo off
    echo usage: PDF2OCT_STEP1.bat inputfile.pdf
    rem About: Generates an image for each page in a PDF
    rem 1.Ghostscript should be installed and the folder be in the path
    rem 2.Ghostscript: https://www.ghostscript.com/download/gsdnld.html
    rem 3.Creates temp files called __inputpages*.png
    gswin64.exe -dNOPAUSE -dBATCH -r600 -sDEVICE=png16m -sOutputFile="__inputpages-%%05d.png" %1
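
The batch file above hard-codes the output name. As a rough sketch of the generic version suggested in Step 1, the same Ghostscript call can be assembled in Python with the output prefix and resolution as parameters. The function name `build_extract_command` and its parameters are illustrative assumptions of mine; only the Ghostscript switches are taken from the batch file.

```python
import subprocess
import sys


def build_extract_command(pdf_path, prefix="__inputpages", dpi=600, gs_exe="gswin64.exe"):
    """Return the Ghostscript command that renders each PDF page to a PNG.

    The switches mirror the Step 1 batch file. The prefix, dpi and gs_exe
    parameters are illustrative additions, not part of the original script.
    """
    return [
        gs_exe,
        "-dNOPAUSE",
        "-dBATCH",
        f"-r{dpi}",
        "-sDEVICE=png16m",
        # A single % is enough here; the doubled %% is only needed inside a .bat file.
        f"-sOutputFile={prefix}-%05d.png",
        pdf_path,
    ]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python extract_pages.py inputfile.pdf [output_prefix]
    command = build_extract_command(sys.argv[1], *sys.argv[2:3])
    subprocess.run(command, check=True)
```

Because the command is passed to `subprocess.run` as a list, file names containing spaces need no extra quoting.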

The dictionary ebook referenced above was about 1000 pages and this step took about 40 minutes on my Core i7, 16 GB, SSD laptop running Windows 10.

Step 2: Generate a text file containing the list of all __inputpages*.png files in the folder, then run the Tesseract engine against each image file. The command-line parameters to tesseract.exe specify the languages (I have given Tamil and English, in that order) and request the output in PDF format. This second batch file requires the input image files to be named __inputpages*.png (the same as the output from Step 1).

    echo off
    echo usage: PDFOCR_STEP2.bat outputfile
    rem About: Does OCR for Tamil for each of the images and then combines them into one PDF
    rem 1.Tesseract.exe has to be installed and be in the path: https://github.com/tesseract-ocr/tesseract
    rem 2.NO input file to be specified and NO .pdf in the output file name
    rem 3.creates temp files called __inputpages*.png and __inputfiles.txt
    dir /b __inputpages*.png >__inputfiles.txt
    tesseract -l tam+eng __inputfiles.txt %1 pdf

For the 1000+ pages of the dictionary ebook referenced above, this step took about 4-7 hours (my machine had gone into sleep mode a few times in between) on my Core i7, 16 GB, SSD laptop running Windows 10.
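
For readers who prefer a script to a batch file, here is a minimal Python sketch of the same two actions in Step 2: writing the list of page images and building the Tesseract command. The function names and default arguments are my own assumptions for illustration; the Tesseract arguments are the ones used in the batch file.

```python
import glob
import subprocess
import sys


def write_page_list(pattern="__inputpages*.png", list_file="__inputfiles.txt"):
    """Write the matching image names, one per line, in sorted (page) order."""
    # Zero-padded page numbers (00001, 00002, ...) sort correctly as plain text.
    pages = sorted(glob.glob(pattern))
    with open(list_file, "w", encoding="utf-8") as handle:
        for page in pages:
            handle.write(page + "\n")
    return pages


def build_ocr_command(list_file, output_base, languages=("tam", "eng")):
    """Return the Tesseract command that writes output_base.pdf."""
    # Same argument order as the batch file: languages, list file, output base, "pdf".
    return ["tesseract", "-l", "+".join(languages), list_file, output_base, "pdf"]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python ocr_pages.py outputfile   (no .pdf extension)
    write_page_list()
    subprocess.run(build_ocr_command("__inputfiles.txt", sys.argv[1]), check=True)
```

Sorting the names explicitly keeps the pages in order regardless of how the operating system happens to list the folder.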

Step 3: The last step is to use Ghostscript to compress the PDF file into a new PDF file that is suitable for reading as an ebook.

    echo off
    echo usage: PDF2OCT_STEP3.bat Inputfile.pdf OutputFile.pdf
    rem About: Compresses a PDF to be an ebook
    rem 1.Ghostscript should be installed and be in the path
    gswin64.exe -sDEVICE=pdfwrite -dCompatibilityLevel=1.4 -dPDFSETTINGS=/ebook -dNOPAUSE -dQUIET -dBATCH -sOutputFile=%2 %1
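
The `/ebook` value above is one of Ghostscript's standard `-dPDFSETTINGS` presets (the others are `/screen`, `/printer`, `/prepress` and `/default`), which trade file size against image quality. A small sketch, assuming a hypothetical helper named `build_compress_command`, shows how the preset could be made a parameter so that different settings can be compared:

```python
def build_compress_command(input_pdf, output_pdf, preset="ebook", gs_exe="gswin64.exe"):
    """Return the Ghostscript command that rewrites a PDF with a quality preset.

    The switches mirror the Step 3 batch file. The preset parameter is an
    illustrative addition; it must be one of Ghostscript's standard names.
    """
    allowed = {"screen", "ebook", "printer", "prepress", "default"}
    if preset not in allowed:
        raise ValueError(f"unknown preset {preset!r}; expected one of {sorted(allowed)}")
    return [
        gs_exe,
        "-sDEVICE=pdfwrite",
        "-dCompatibilityLevel=1.4",
        f"-dPDFSETTINGS=/{preset}",
        "-dNOPAUSE",
        "-dQUIET",
        "-dBATCH",
        f"-sOutputFile={output_pdf}",
        input_pdf,
    ]
```

The returned list can be run with `subprocess.run(build_compress_command("in.pdf", "out.pdf"), check=True)`; the file names here are placeholders.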

Scanning

If you are scanning (say, an old book), you will probably scan two facing pages at once to finish quicker. While this saves scanning time, the result is inconvenient to read on-screen as a PDF and to run OCR on (as described above with Tesseract). It is better to split the scans into individual pages as in the original book, and to remove the noise and the brown haze from all the pages. To do this, there is an awesome tool called Scan Tailor, which is open-source and free. The tool requires a bit of learning; you can start with the quick start video or the step-by-step instructions in their wiki. Remember that the tool accepts image files as input and produces image files as output.

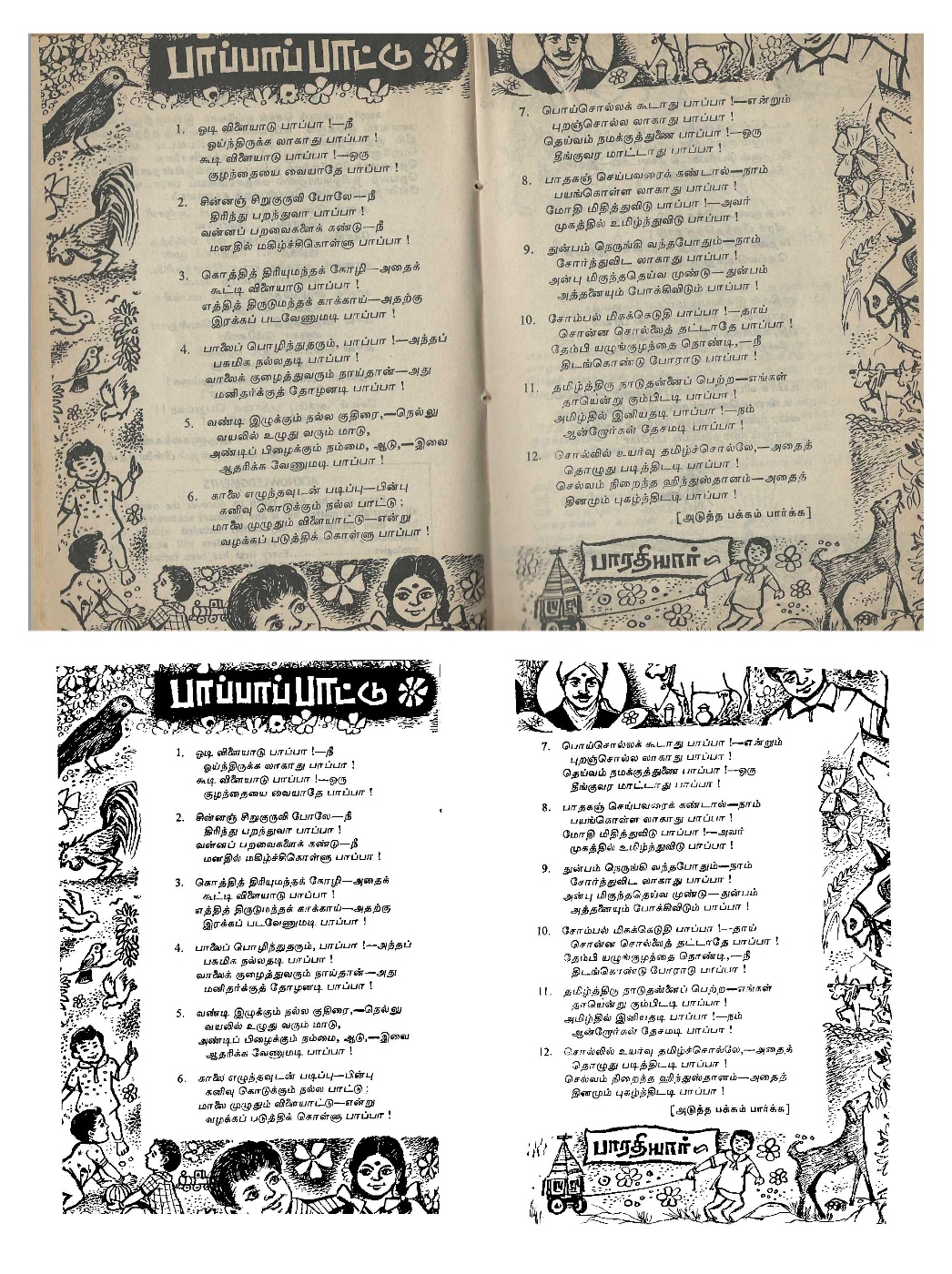

The other day I was trying to scan (to preserve) an 80-year-old book of over 150 pages. I got all the pages scanned, but each scan contained two facing pages and looked unimpressive. I used Scan Tailor to split the pages (from two facing pages to single pages), straighten them, despeckle them (remove the non-text/image areas like the brown paper background) and then output clean, sharp-looking pages.

Above is a sample: on top is the scanned image I started with, and at the bottom are the two output pages after using Scan Tailor.
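
To illustrate just the page-splitting idea, here is a deliberately naive Python sketch that cuts a two-page scan down the middle. This is not how Scan Tailor works (it detects the page boundary, deskews and cleans the image); the sketch assumes the spine sits exactly at the horizontal centre of the scan. The function names are my own, and `split_scan` additionally assumes the third-party Pillow library is installed.

```python
def facing_page_boxes(width, height, gutter=0):
    """Return (left, upper, right, lower) crop boxes for the left and right pages.

    Assumes the spine is at the horizontal midpoint of the scan; gutter is the
    total number of pixels to discard around the spine.
    """
    middle = width // 2
    half_gutter = gutter // 2
    left_box = (0, 0, middle - half_gutter, height)
    right_box = (middle + half_gutter, 0, width, height)
    return left_box, right_box


def split_scan(path, left_path, right_path, gutter=0):
    """Save the two halves of a facing-pages scan as separate image files."""
    from PIL import Image  # third-party dependency: pip install Pillow

    with Image.open(path) as scan:
        width, height = scan.size
        left_box, right_box = facing_page_boxes(width, height, gutter)
        scan.crop(left_box).save(left_path)
        scan.crop(right_box).save(right_path)
```

For a real book, where pages are rarely perfectly centred or straight, Scan Tailor remains the better choice.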

Hello Sir,

You solved a problem that I have been struggling with for quite some time. I really appreciate your step-by-step instruction which motivated me to attempt it.

When I was discussing my problem with a friend of mine, he sent me this link.

https://venkatarangan.com/blog/2019/09/python-and-google-cloud-vision-for-tamil-text/

That method was a bit too much for me since I have no exposure to Python. Then luckily it occurred to me to search your blog for any other such article on OCR. I searched and found this blog post. Since it involved only a few batch files, I felt emboldened to attempt it.

I tried and succeeded without much problem. I did have some hiccups on the way, but I figured out where I was going wrong.

Thanks once again for sharing your knowledge.

Regards,

Sundar Rajan

Glad to hear it worked out for you. We get so much of our information and tips from the Internet, and to fulfil our karma we need to share with others. That’s why I started writing my blog 17 years ago, and it has surprisingly grown to over 3000 posts. I found it to be a destressing tool. Thanks.

Thank you Mr. Rangan,

I dug through your blog and found there were many topics of common interest. I have also travelled the same route, starting with HPBasic, GWBasic, QB, VB5 & 6. I never felt comfortable with VB.NET and never bothered to migrate. VB5 (not even VB6 !) is still my best companion. I am so addicted to VB that I just can’t move on to any other language. I keep looking all the time for some cross platform VB equivalent. I tried B4X and was quite impressed by it. I do want to move on to Python.

Now, let me come to my real issue, which landed me in this blog.

A friend of mine has authored a number of Tamil books. They are intended for free distribution. You can have a look at them here.

https://drive.google.com/drive/folders/1iG2EoY0R6okgIGsByxAI4D2eMJ6dcak0?usp=sharing

Since I had started learning B4A, I thought it would be a nice idea to write an App embedding all these books.

The inspiration came from an App by Mr. Sudhakar Kanakaraj,

https://play.google.com/store/apps/details?id=com.whiture.apps.tamil.kalki.ponniyin

I think he has converted many of the classic books from Project Madurai as Android Apps.

The books that I wanted to convert are all there in PDF format. There is no need to scan them.

I could have just packed all the books into the App and left it to the reader to use whatever PDF reader he was comfortable with. But I didn’t like the pinching, enlarging and scrolling to view the PDF contents, in a comfortable font size, in that small phone window.

I wanted the font size to be variable and the text to re-flow and adjust automatically to the screen size, just as it happens in Sudhakar’s apps.

So the task was to convert the PDF into HTML. I tried Calibre and it was a miserable failure. Calibre’s output was completely unreadable.

Realization dawned upon me that it was not going to be all that easy to accomplish what I had set out to do, because of my total ignorance of Tamil fonts, encoding, conversion, etc.

The books had been created in Pagemaker with numerous non-Unicode fonts.

The first task, therefore, was to convert the text into Unicode.

With your code, this first step has been achieved.

This itself was a big hurdle until now.

It was too much of a hassle uploading the images to Google Drive, opening them as a Google Doc, waiting for the text to be recognized, then copying the text, pasting it into another doc and so on.

Now comes the next problem

Most of the books have been formatted in a two-column layout. Just as an example, you can check the book “amma, appa part – 3.pdf”. That is the one I successfully converted yesterday.

I now have to extract just the title (if there is one), the text in the first column, then the text in the second column, move on to the next page and so on.

Any suggestions as to how to go about automating this?

Is this the right approach?

Is there a better way to do this?

I would appreciate some guidance from you on this.

Regards,

Sundar Rajan

I read your comment with interest. I also checked a couple of PDFs from the Google Drive and found interesting works, like the book of travel tips for first-time travellers. All the best to your author friend.

What you described is a widespread problem for (almost) all publishers/authors in Tamil Nadu, due to the usage of Non-Unicode fonts and non-standard processes. Why it is happening is a set of many stories – if you wish to know the background, search my blog for Tamil Unicode.

Coming to your problem at hand, the best approach will be to start with the original Pagemaker files and save/convert them into formats like Word, text or HTML. Then use a tool like NHM Converter (https://indiclabs.in/products/converter/) to convert them to Unicode and go from there. Most often this step will be a difficult one, as typesetters use multiple fonts within the same file and they won’t convert automatically; you need to either write custom scripts or do manual work.

The other approach is to use a good OCR engine like Tesseract, which I have described in my other post, and automate the conversion from PDF to text format. This can be automated with simple Python scripts. If you spend a bit of time understanding the Tesseract settings and then tweak the parameters, you may get better accuracy. After this, do a manual check and you will have Unicode-ready text/HTML files to work on. For posterity and search engine friendliness, embed the Unicode text in the PDF files and then make them available on the Internet for Google to crawl.
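
As a minimal sketch of the kind of Python script meant here (the function name and defaults are illustrative assumptions, not an existing tool), the following builds a Tesseract command that writes plain Unicode text instead of a PDF. It optionally passes `--psm`, Tesseract's page segmentation mode, which is one of the parameters worth experimenting with: mode 3 is the default fully automatic layout analysis, while mode 4 assumes a single column of text.

```python
def build_text_ocr_command(image_path, output_base, languages=("tam", "eng"), psm=None):
    """Return a Tesseract command that writes output_base.txt as Unicode text.

    psm is Tesseract's page segmentation mode; None leaves the default in place.
    """
    command = ["tesseract", image_path, output_base, "-l", "+".join(languages)]
    if psm is not None:
        command += ["--psm", str(psm)]
    # "txt" selects Tesseract's plain-text output configuration.
    command.append("txt")
    return command
```

Each command can be run with `subprocess.run(command, check=True)` inside a loop over the page images, and the resulting text files reviewed by hand afterwards.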

To know more on this subject, you may want to follow: https://groups.google.com/g/pmadurai, https://twitter.com/tshrinivasan and https://twitter.com/ezhillang amongst others.

Lastly, if you wish to engage a professional (freelancer) to help with this work, I would recommend my friend Mr K S Nagarajan, developer of the popular NHM Writer Keyboard. You can reach him at https://www.linkedin.com/in/ksnagarajan/

Thank you very much. I will follow the leads given by you.

I did obtain the Pagemaker file for “amma, appa part – 3.pdf” along with some sample fonts, just for experimenting. But I don’t intend to continue down that route. I would like the solution to be more generic and let the starting point be PDF.

As regards engaging your friend, I would rather not. This is a learning adventure. I wouldn’t want to lose the opportunity. But in case he has a blog on this subject, I would definitely be interested.

I will certainly go through your blog posts. I found lots of interesting stuff. I have already subscribed also.

Regards,

Sundar Rajan

Hello Mr. Venkatarangan,

I thought I should share this happy discovery with you.

I just had to change the output format of Tesseract to txt to get the full text extracted, IN THE PROPER SEQUENCE (entire left column, followed by entire right column, etc.).

If there is a way to upload files, I could have uploaded the original page and the converted text. Can I have your email id?

Thanks a lot.

I found the blog of TShrinivasan very informative. I also happened to see your correspondence with him.

I am very happy to have come in contact with you. It has opened many avenues for me.

Regards,

Sundar Rajan

Hello Venkatarangan, we are doing a study on open-source OCR engines for South Indian languages. Would you be able to give us some insights about Tamil OCR? How can I contact you?

I have DM’ed my email to you.